ARS

latest results

The ARS project started with the ambitious goal of defining a framework for robotic autonomy in the context of surgery: how can an artificial device, such as a robot, interact with, and at times replace, a human in a difficult and delicate task like surgery?

To make the project manageable, the initial development was organized according to five main research directions, i.e. the project objectives, which represent the main pillars of autonomy:

1. THE FORMAL REPRESENTATION OF A SURGICAL INTERVENTION

The first objective has been reached by developing a representation method based on logical rules and by linking this representation with the textual description of interventions found in surgical textbooks.

2. HOW TO PLAN A ROBOTIC INTERVENTION

The second objective has been reached by devising learning methods that refine and personalize a procedure for a given anatomical environment.

3. HOW TO CONTROL THE ROBOT MOTIONS

The third objective led to the refinement of methods to learn the motion primitives of each surgical action. Surgical awareness is achieved by combining simulation, which represents the a priori understanding of the intervention, with sensor feedback, which gives the robot quantitative data about the environment, i.e. where the instruments are with respect to the organs, which points of the anatomy are fixed, which organs are subject to biological motion, etc.

4. HOW TO ENDOW A ROBOT WITH AWARENESS OF ITS ACTIONS and 5. HOW TO DEMONSTRATE THE DEVICE CAPABILITIES IN REALISTIC ENVIRONMENTS

A first integration of these research results was demonstrated at mid-project with the autonomous execution of a training task, the peg-and-ring task, which is directly comparable with how a surgeon in training performs the same task. We are now further integrating all these methods into the simulation of a kidney intervention, which is more clinically relevant than the demonstrations done so far.

1.

The formal representation of a surgical intervention

Extracting knowledge from text, Marco Bombieri, Ph.D.

Robot-assisted minimally invasive surgery is the gold standard for the surgical treatment of many pathological conditions, and several manuals and academic papers describe how to perform these interventions. These high-quality, often peer-reviewed texts are the main study resource for medical personnel and consequently contain essential domain-specific procedural knowledge.

The procedural knowledge described therein could be extracted and used to develop clinical decision support systems, or even methods to automate some steps of a procedure.

However, natural language understanding algorithms, such as semantic role labelers, are less effective and have coverage issues when applied to domains other than those they are typically trained on (e.g., newswire text).

The Robotic Surgery Procedural Framebank

To overcome this problem, starting from PropBank frames, we propose a new linguistic resource specific to the robotic-surgery domain, named Robotic Surgery Procedural Framebank (RSPF).

We extract from robotic-surgery texts the verbs and nouns that describe surgical actions, and we extend PropBank frames with whatever is needed to cover them: new lemmas where a lemma is missing, new frames that capture the surgical meaning of an action, and new role sets for the semantic roles used in procedural surgical language.

Our resource is publicly available and can be used to annotate corpora in the surgical domain to train and evaluate Semantic Role Labeling (SRL) systems in a challenging fine-grained domain setting.
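To make the idea of a frame with semantic roles concrete, here is a minimal sketch of how a PropBank-style role set and one annotated sentence could be represented. The role set `dissect.01`, its role descriptions, the example sentence and the class names are illustrative assumptions, not entries taken from RSPF or PropBank; only the `<lemma>.<sense number>` naming and the numbered `ARG` labels follow the general PropBank convention.

```python
from dataclasses import dataclass


@dataclass
class Roleset:
    """A PropBank-style role set: one sense of a predicate plus its roles."""
    roleset_id: str   # "<lemma>.<sense number>"
    gloss: str        # short description of the sense
    roles: dict       # role label -> description of the role


@dataclass
class Annotation:
    """One predicate occurrence with its labeled argument spans."""
    roleset: Roleset
    predicate: str
    arguments: dict   # role label -> text span in the sentence

    def undefined_roles(self):
        """Return role labels used in the annotation but absent from the role set."""
        return sorted(set(self.arguments) - set(self.roleset.roles))


# Hypothetical surgical role set (an assumption for illustration only).
dissect = Roleset(
    roleset_id="dissect.01",
    gloss="separate tissue along anatomical planes",
    roles={
        "ARG0": "agent performing the dissection",
        "ARG1": "tissue or structure being dissected",
        "ARG2": "instrument used",
    },
)

# "The surgeon dissects the renal artery with monopolar scissors."
annotation = Annotation(
    roleset=dissect,
    predicate="dissects",
    arguments={
        "ARG0": "The surgeon",
        "ARG1": "the renal artery",
        "ARG2": "with monopolar scissors",
    },
)

# An annotation that uses a label the role set does not define.
bad_annotation = Annotation(dissect, "dissects", {"ARG5": "carefully"})
```

A consistency check such as `undefined_roles()` is the kind of validation an annotation tool might run before a corpus is used to train an SRL system.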

- Natural Language Processing 90%

- Ontologies and semantics 80%

- Language extraction 90%

2.

How to plan a robotic intervention

A mathematical model of the intervention, Michele Ginesi, Ph.D.

Dynamic Movement Primitives

Full and partial automation of Robotic Minimally Invasive Surgery holds significant promise to improve patient treatment, reduce recovery time, and reduce the fatigue of the surgeons. However, to accomplish this ambitious goal, a mathematical model of the intervention is needed.

We propose to use Dynamic Movement Primitives (DMPs) to encode the gestures a surgeon has to perform to achieve a task.

DMPs make it possible to learn a trajectory, thus imitating the dexterity of the surgeon, and to execute it while generalizing it both spatially (to new start and goal positions) and temporally (to different execution speeds).

Moreover, they have other desirable properties that make them well suited for surgical applications, such as online adaptability, robustness to perturbations, and the possibility to implement obstacle avoidance.

We propose various modifications that improve on the state of the art of the framework, as well as novel methods to handle obstacles. Moreover, we validate the use of DMPs to model gestures by automating a surgery-related task with DMPs as the low-level trajectory generator.
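As an illustration of the spatial and temporal generalization described above, the following is a minimal sketch of a textbook one-dimensional DMP: a critically damped spring toward the goal plus a learned forcing term driven by a decaying phase variable. It does not include the modifications or the obstacle handling proposed in this work; the class name `DMP1D`, the gains, the number of basis functions and the minimum-jerk demonstration are all assumptions chosen for the example.

```python
import numpy as np


class DMP1D:
    """Textbook 1-D discrete DMP (a sketch, not the project's formulation)."""

    def __init__(self, n_basis=30, K=100.0, alpha=4.0):
        self.K = K
        self.D = 2.0 * np.sqrt(K)          # critical damping
        self.alpha = alpha                 # decay rate of the phase variable s
        self.centers = np.exp(-alpha * np.linspace(0.0, 1.0, n_basis))
        d = np.diff(self.centers)
        self.widths = 1.0 / np.append(d, d[-1]) ** 2
        self.w = np.zeros(n_basis)

    def _features(self, s):
        # Normalized Gaussian basis functions, scaled by the phase s.
        psi = np.exp(-self.widths * (s - self.centers) ** 2)
        return psi * s / (psi.sum() + 1e-10)

    def fit(self, x_demo, dt):
        """Learn forcing-term weights from one demonstration (needs g != x0)."""
        x_demo = np.asarray(x_demo, dtype=float)
        n = len(x_demo)
        self.tau = (n - 1) * dt
        self.x0, self.g = x_demo[0], x_demo[-1]
        v = np.gradient(x_demo, dt)
        a = np.gradient(v, dt)
        s = np.exp(-self.alpha * np.arange(n) * dt / self.tau)
        # Invert the DMP dynamics to get the forcing term the demo requires.
        f_target = (self.tau**2 * a - self.K * (self.g - x_demo)
                    + self.D * self.tau * v) / (self.g - self.x0)
        phi = np.stack([self._features(si) for si in s])
        self.w = np.linalg.lstsq(phi, f_target, rcond=None)[0]

    def rollout(self, x0=None, g=None, tau=None, dt=0.01):
        """Integrate the DMP with explicit Euler for one movement duration."""
        x0 = self.x0 if x0 is None else x0
        g = self.g if g is None else g
        tau = self.tau if tau is None else tau
        x, z, s = x0, 0.0, 1.0
        traj = [x]
        for _ in range(int(round(tau / dt))):
            f = self._features(s) @ self.w
            z_dot = (self.K * (g - x) - self.D * z + (g - x0) * f) / tau
            x_dot = z / tau
            z += z_dot * dt
            x += x_dot * dt
            s += -self.alpha * s / tau * dt
            traj.append(x)
        return np.array(traj)


# Demonstration: a minimum-jerk motion from 0 to 1 lasting 1 second.
t = np.linspace(0.0, 1.0, 101)
dt = 0.01
demo = 10 * t**3 - 15 * t**4 + 6 * t**5

dmp = DMP1D()
dmp.fit(demo, dt)
same = dmp.rollout(dt=dt)                         # reproduce the demonstration
new_goal = dmp.rollout(g=2.0, dt=dt)              # spatial generalization
slower = dmp.rollout(tau=2.0 * dmp.tau, dt=dt)    # temporal generalization
```

Changing `g` rescales the learned shape toward a new goal, while changing `tau` stretches the same shape in time; neither requires refitting the weights.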

- Mathematical model 90%

- Dynamic Movement Primitives 80%

- Library of actions and gestures 90%

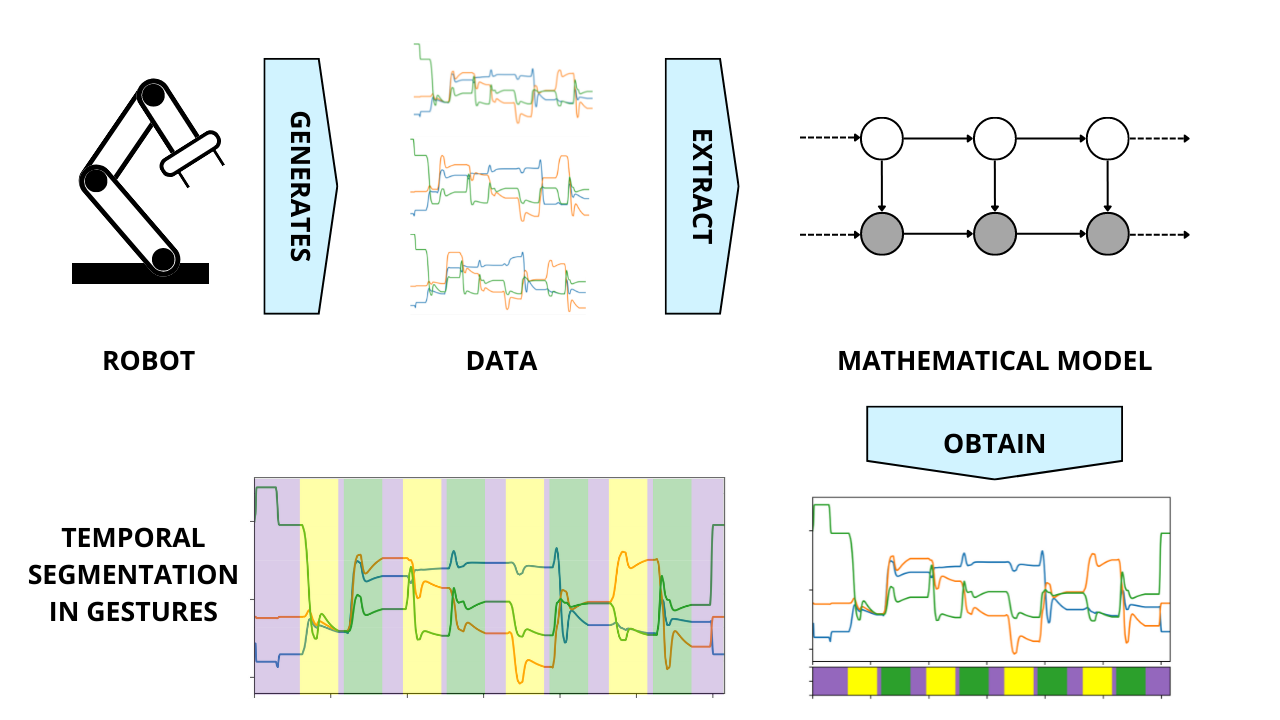

Unsupervised segmentation of task executions into gestures

In the second part of our research, we address the problem of unsupervised segmentation of task executions into gestures. We introduce latent variable models to tackle the problem and propose further developments that combine such models with DMP theory. We review the Auto-Regressive Hidden Markov Model (AR-HMM) and test it on surgery-related datasets. We then propose a generalization of the AR-HMM to general nonlinear dynamics and show that it yields a more accurate segmentation with less severe over-segmentation.

Finally, we propose a further generalization of the AR-HMM that aims to integrate DMP-like dynamics into the latent variable model.
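To show what segmentation with an AR-HMM amounts to, here is a minimal sketch of Viterbi decoding for the plain linear AR-HMM, in which each hidden state (gesture) has its own first-order linear dynamics. It is not the nonlinear or DMP-like generalization proposed here. The regime parameters are assumed known, whereas in practice they would be estimated from data (for example with expectation-maximization); the function name, the isotropic noise model and the synthetic signal are assumptions made for the example.

```python
import numpy as np


def arhmm_viterbi(x, A, b, sigma, log_trans, log_init):
    """Most likely regime sequence under a linear AR(1)-HMM.

    x: (T, d) observations; A: (K, d, d) and b: (K, d) define
    x[t] = A[k] @ x[t-1] + b[k] + noise for regime k;
    sigma: isotropic Gaussian noise std (assumed shared by all regimes);
    log_trans: (K, K) log transition matrix, rows = from, columns = to;
    log_init: (K,) log initial probabilities.
    Returns one regime label per transition x[t-1] -> x[t], shape (T-1,).
    """
    T, d = x.shape
    K = A.shape[0]
    # Log-likelihood of every observed transition under every regime.
    pred = np.einsum("kij,tj->tki", A, x[:-1]) + b[None]
    resid = x[1:, None, :] - pred
    loglik = (-0.5 * np.sum(resid**2, axis=2) / sigma**2
              - d * np.log(sigma * np.sqrt(2.0 * np.pi)))

    delta = log_init + loglik[0]
    back = np.zeros((T - 1, K), dtype=int)
    for t in range(1, T - 1):
        scores = delta[:, None] + log_trans       # (from, to)
        back[t] = np.argmax(scores, axis=0)
        delta = scores[back[t], np.arange(K)] + loglik[t]

    # Backtrack from the best final regime.
    states = np.empty(T - 1, dtype=int)
    states[-1] = np.argmax(delta)
    for t in range(T - 2, 0, -1):
        states[t - 1] = back[t, states[t]]
    return states


# Synthetic 1-D signal: 20 steps moving up, then 20 steps moving down.
rng = np.random.default_rng(0)
true_states = np.array([0] * 20 + [1] * 20)
drift = np.where(true_states == 0, 0.1, -0.1)
x = np.zeros((41, 1))
for t in range(40):
    x[t + 1, 0] = x[t, 0] + drift[t] + 0.01 * rng.standard_normal()

A = np.ones((2, 1, 1))                     # both regimes: x[t] = x[t-1] + b[k]
b = np.array([[0.1], [-0.1]])
log_trans = np.log(np.array([[0.95, 0.05], [0.05, 0.95]]))  # "sticky" regimes
log_init = np.log(np.array([0.5, 0.5]))
decoded = arhmm_viterbi(x, A, b, 0.05, log_trans, log_init)
```

The high self-transition probability penalizes frequent switching, which is one simple way to limit over-segmentation in this kind of model.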

3.

How to control the robot motions

Patient-specific simulation for autonomous surgery, Eleonora Tagliabue, Ph.D.

An Autonomous Robotic Surgical System (ARSS) has to interact with a complex anatomical environment, which deforms and whose properties are often uncertain. Within this context, an ARSS can benefit from the availability of a patient-specific simulation of the anatomy. For example, simulation can provide a safe and controlled environment for the design, testing and validation of the autonomous capabilities. Moreover, it can be used to generate large amounts of patient-specific data that can be exploited to learn models and/or tasks.

The aim of this research work is to investigate the different ways in which simulation can support an ARSS and to propose solutions to favor its employability in robotic surgery.

How can simulation support an ARSS and its employability in robotic surgery?

We first address all the phases needed to create such a simulation, from model choice in the pre-operative phase based on the available knowledge to its intra-operative update to compensate for inaccurate parametrization. We propose to rely on deep neural networks trained with synthetic data both to generate a patient-specific model and to design a strategy to update model parametrization starting directly from intra-operative sensor data.

Afterwards, we test how simulation can assist the ARSS, both for task learning and during task execution.

We show that simulation can be used to efficiently train approaches that require multiple interactions with the environment, compensating for the risk of acquiring data from real surgical robotic systems.

Finally, we propose a modular framework for autonomous surgery that includes deliberative functions to handle real anatomical environments with uncertain parameters. The integration of a personalized simulation proves fundamental both for optimal task planning and to enhance and monitor real execution.

The contributions presented in this thesis have the potential to introduce significant step changes in the development and actual performance of autonomous robotic surgical systems, bringing them closer to applicability in real clinical conditions.

- Pre-operative modelling 90%

- Training methods 80%

- Deep neural networks 90%

3.

How to control the robot motions

Medical SLAM in an autonomous robotic system, Andrea Roberti, Ph.D.

In minimally invasive surgery (MIS), optical techniques are an increasingly attractive approach for in vivo 3D reconstruction of the soft-tissue surface geometry. My thesis addresses the ambitious goal of surgical autonomy through the study of the anatomical environment, starting from the technology currently available and from what is needed to analyze the scene: vision sensors.

We present a novel endoscope for autonomous surgical tasks that combines a standard stereo camera with a depth sensor. This solution introduces several key advantages, such as the possibility of reconstructing the 3D scene at a greater distance than traditional endoscopes allow.

The second part of the thesis addresses the problem of 3D reconstruction and the algorithms currently in use. In MIS, simultaneous localization and mapping (SLAM) can be used to localize the pose of the endoscopic camera and build a 3D model of the tissue surface. Another key element for MIS is real-time knowledge of the pose of the surgical tools with respect to the surgical camera and the underlying anatomy. Starting from the ORB-SLAM algorithm, we modified its architecture to make it usable in an anatomical environment by registering the pre-operative information of the intervention to the map obtained from SLAM. Once the SLAM algorithm was shown to be usable in an anatomical environment, we improved it by adding semantic segmentation to distinguish dynamic features from static ones.
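One simple way to use semantic segmentation for separating dynamic from static features is to discard every detected keypoint that falls on a pixel labeled as a moving class, so that only static features feed the SLAM map. The sketch below illustrates that idea only and is not the modified ORB-SLAM pipeline itself; the function name, the class ids (0 = tissue, 1 = instrument) and the toy mask are assumptions.

```python
import numpy as np


def filter_static_keypoints(keypoints_xy, label_mask, dynamic_labels):
    """Keep only keypoints that lie on pixels of static classes.

    keypoints_xy: (N, 2) array of (x, y) pixel coordinates;
    label_mask: (H, W) integer array of per-pixel class ids;
    dynamic_labels: iterable of class ids treated as moving.
    Returns the kept keypoints and the boolean mask that selects them.
    """
    kp = np.asarray(keypoints_xy, dtype=float)
    h, w = label_mask.shape
    # Round to the nearest pixel and clamp to the image bounds.
    cols = np.clip(np.rint(kp[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.rint(kp[:, 1]).astype(int), 0, h - 1)
    labels = label_mask[rows, cols]
    is_static = ~np.isin(labels, list(dynamic_labels))
    return kp[is_static], is_static


# Toy 4x6 segmentation: class 0 = tissue, class 1 = instrument (assumed ids).
mask = np.zeros((4, 6), dtype=int)
mask[:, 4:] = 1                       # the instrument occupies the right side

keypoints = np.array([[0.2, 0.9],     # on tissue
                      [4.6, 2.1],     # on the instrument
                      [3.4, 3.0]])    # on tissue, near the boundary
static_kp, keep = filter_static_keypoints(keypoints, mask, dynamic_labels={1})
```

In a full system the same mask would typically be applied before feature matching, so that moving tools do not corrupt camera pose estimation.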

- Vision sensors 90%

- 3D Modelling 80%

- SLAM 90%

4. – 5.

How to endow a robot with awareness of its actions and How to demonstrate the device capabilities in realistic environments.

Full (supervised) autonomy in surgery, Daniele Meli, Ph.D.

This research work addresses the long-term goal of full (supervised) autonomy in surgery, characterized by dynamic environmental (anatomical) conditions, unpredictable workflow of execution and workspace constraints.

The scope is to reach autonomy at the level of sub-tasks of a surgical procedure, i.e. repetitive and tedious operations (e.g., dexterous manipulation of small objects in a constrained environment, such as a needle and wire for suturing).

This will help reduce execution time, hospital costs and surgeon fatigue during the whole procedure, while further improving recovery time for patients.

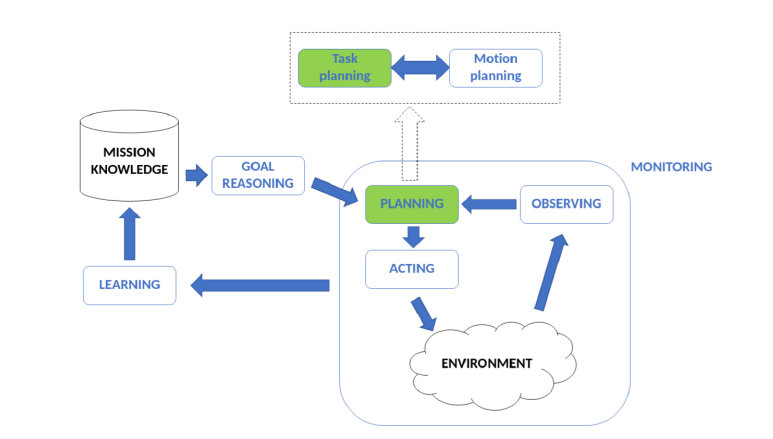

A novel framework for autonomous surgical task execution

A novel framework for autonomous surgical task execution is presented in the first part of this thesis. It is based on answer set programming (ASP), a logic programming paradigm, for task planning (i.e., coordination of elementary actions and motions). Logic programming makes it possible to encode surgical task knowledge directly, representing a plan reasoning methodology rather than a set of pre-defined plans.

This solution introduces several key advantages, such as

• reliable, human-like, interpretable plan generation

• real-time monitoring of the environment and the workflow, for ready adaptation and failure recovery.

Moreover, an extended review of logic programming for robotics is presented, motivating the choice of ASP for surgery and providing a useful guide for robotic designers.

- Logic programming 90%

- Dynamic Movement Primitives 80%

- Library of actions and gestures 90%

A novel framework based on ILP

In the second part of the thesis, a novel framework based on inductive logic programming (ILP) is presented for learning and refining surgical task knowledge. ILP guarantees fast learning from very few examples, which matters because the scarcity of examples is a common drawback in surgery. Also, a novel action identification algorithm is proposed, based on automatic extraction of environmental features from videos. It deals for the first time with small and noisy datasets that collect different execution workflows under environmental variations. This makes it possible to define a systematic methodology for unsupervised ILP.

All the results in this thesis are validated on a non-standard version of the benchmark ring transfer training task for surgeons, which mimics some of the challenges of real surgery, e.g. constrained bimanual motion in a small space.

Figure: The functions of a deliberative robot.